January 30, 2024

Exploring the Distinctions Between GPUs and CPUs

Initially devised for graphics rendering in gaming and animation, GPUs now find applications well beyond their initial scope.

A CPU, or central processing unit, serves as the primary computational hub in a server, handling diverse computing tasks crucial for the operation of the operating system and applications. In comparison, a graphics processing unit (GPU) is a specialized hardware component designed to efficiently execute intricate mathematical operations in parallel, outperforming a general-purpose CPU. Initially devised for graphics rendering in gaming and animation, GPUs now find applications well beyond their initial scope.

Key Differences: CPUs vs. GPUs

The advent of compute-intensive workloads related to computer graphics and animation exposed the limitations of CPUs. Tasks like video game animation demanded the processing of vast amounts of pixel data, causing performance issues with geometric mathematical calculations on CPUs at the time.

Recognizing the need to offload multimedia-oriented tasks, hardware manufacturers introduced GPUs. Today, GPUs excel at handling compute-intensive applications, such as machine learning and artificial intelligence, more efficiently than CPUs.

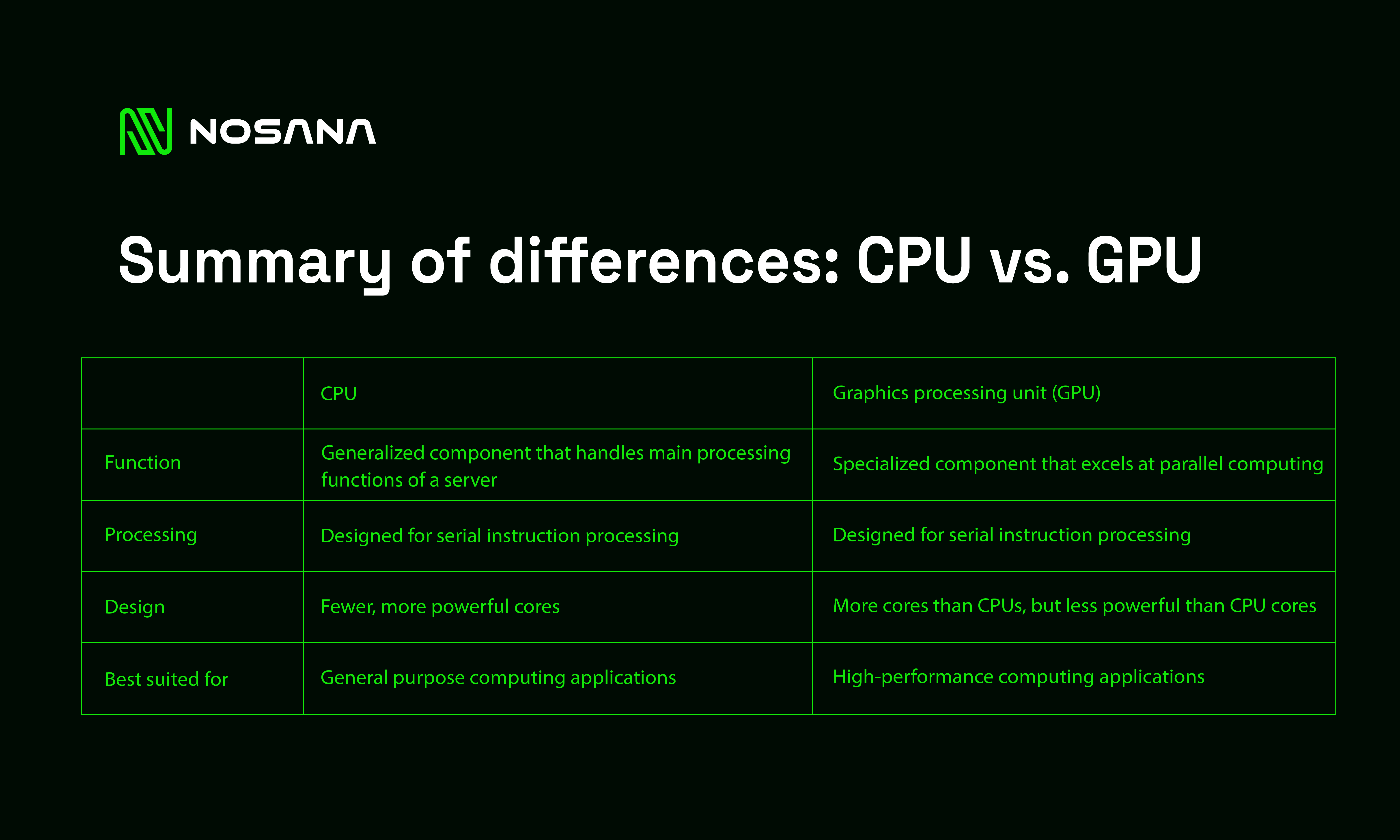

Function

The primary disparity between a CPU and GPU lies in their functions. A CPU is indispensable for server operation, managing all tasks necessary for the proper functioning of server software. Conversely, a GPU collaborates with the CPU to execute concurrent calculations. The GPU excels at completing simple, repetitive tasks swiftly by breaking them down into smaller components and processing them in parallel.

Design

GPUs stand out in parallel processing through numerous cores or arithmetic logic units (ALU). While GPU cores are less potent than CPU cores and have less memory, they play a pivotal role in parallel computing. Unlike CPUs, which swiftly switch between various instruction sets, GPUs efficiently process a high volume of the same instructions at high speed.

Illustrative Differences

To illustrate these differences, consider the analogy of a CPU as a head chef in a large restaurant responsible for flipping hundreds of burgers. Although the head chef could perform this task individually, it may not be the most efficient use of time. Interruptions or slowdowns in kitchen operations might occur. In contrast, a GPU is akin to a junior assistant with ten hands, proficient at flipping 100 burgers in 10 seconds, demonstrating the efficiency of parallel processing.

Similarities Shared by GPUs and CPUs

Both CPUs and GPUs are integral hardware units powering computing devices, akin to the brains behind the machine. They share common internal components, encompassing cores, memory, and control units.

Core

Both GPU and CPU architectures incorporate cores responsible for executing computations and logical functions. These cores retrieve instructions from memory in the form of digital signals (bits), decode them, and process them through logical gates within a defined instruction cycle. While CPUs traditionally had a single core, modern CPUs and GPUs commonly feature multiple cores.

Memory

Both CPUs and GPUs engage in millions of calculations per second, utilizing internal memory to enhance processing efficiency. Cache, the embedded memory facilitating rapid data access, is categorized as L1, L2, or L3 in CPUs, with L1 being the fastest and L3 the slowest. A memory management unit (MMU) governs data movement between the CPU core, cache, and RAM during each instruction cycle.

Control Unit

The control unit synchronizes processing tasks and determines the frequency of electric pulses generated by the processing unit. Higher-frequency CPUs and GPUs generally offer superior performance. However, variations in design and configuration make CPUs and GPUs suitable for different scenarios.

When to use GPUs over CPUs

When it comes to opting for GPUs over CPUs, it's important to recognize that it's not a matter of choosing one over the other. Every server or cloud server instance necessitates a CPU for its operation. However, certain servers go the extra mile by incorporating GPUs as supplementary coprocessors. The decision to use GPUs or CPUs depends on the specific workloads and their efficiency in handling certain functions.

Here are some examples where opting for GPUs over CPUs can be beneficial:

-

Deep Learning:

- Deep learning, a facet of artificial intelligence (AI), involves training computers to process data in a manner inspired by the human brain.

- GPU-based servers exhibit high-performance capabilities for tasks related to machine learning, neural networks, and deep learning. They excel in handling complex pattern recognition in images, text, sounds, and other data, leading to accurate insights and predictions.

-

High-Performance Computing:

- High-performance computing pertains to tasks demanding exceptionally high computational power.

- Examples include running geoscientific simulations, seismic processing, financial simulations for risk identification, hedging opportunities, and developing predictive data science applications in medicine, genomics, and drug discovery.

- GPU-based computer systems are better suited for efficiently handling these high-performance computing tasks.

-

Autonomous Vehicles:

- Developing and deploying advanced driver-assistance systems (ADAS) and autonomous vehicle (AV) systems necessitates highly scalable computing, storage, networking, and analytics technologies.

- Tasks such as data collection, labeling and annotation, map development, algorithm development, simulations, and verification require the robust support of GPU-based computer systems to operate efficiently.

How can Nosana put your GPUs to work?

Our decentralized GPU grid represents a new era in the world of computing. By harnessing the latent power of idle GPUs globally, our compute grid provides on-demand access to GPU resources that is both cost-effective but highly efficient.

Nosana empowers anyone with spare compute to contribute their idle processing power to our GPU grid. Doing so, you will make a significant impact on the advancement of AI projects, all while earning substantial rewards.

Ready to contribute your compute power to the grid?

Learn more docs.nosana.io/nodes/grid