January 11, 2024

Deep Learning Unveiled: Navigating Training, Inference, and the GPU Shortage Dilemma

Deep learning is one of the most important technologies in the rapidly changing field of artificial intelligence (AI), and it drives new ideas in many areas. But right now the field faces a big problem: there aren't enough GPUs. The shortage affects both training and inference, the two stages every AI model depends on. Blockchain-based solutions like our GPU-compute grid are emerging in response, offering compute that is scalable and cost-effective.

Deep Learning Training vs. Inference: The Core of AI Models

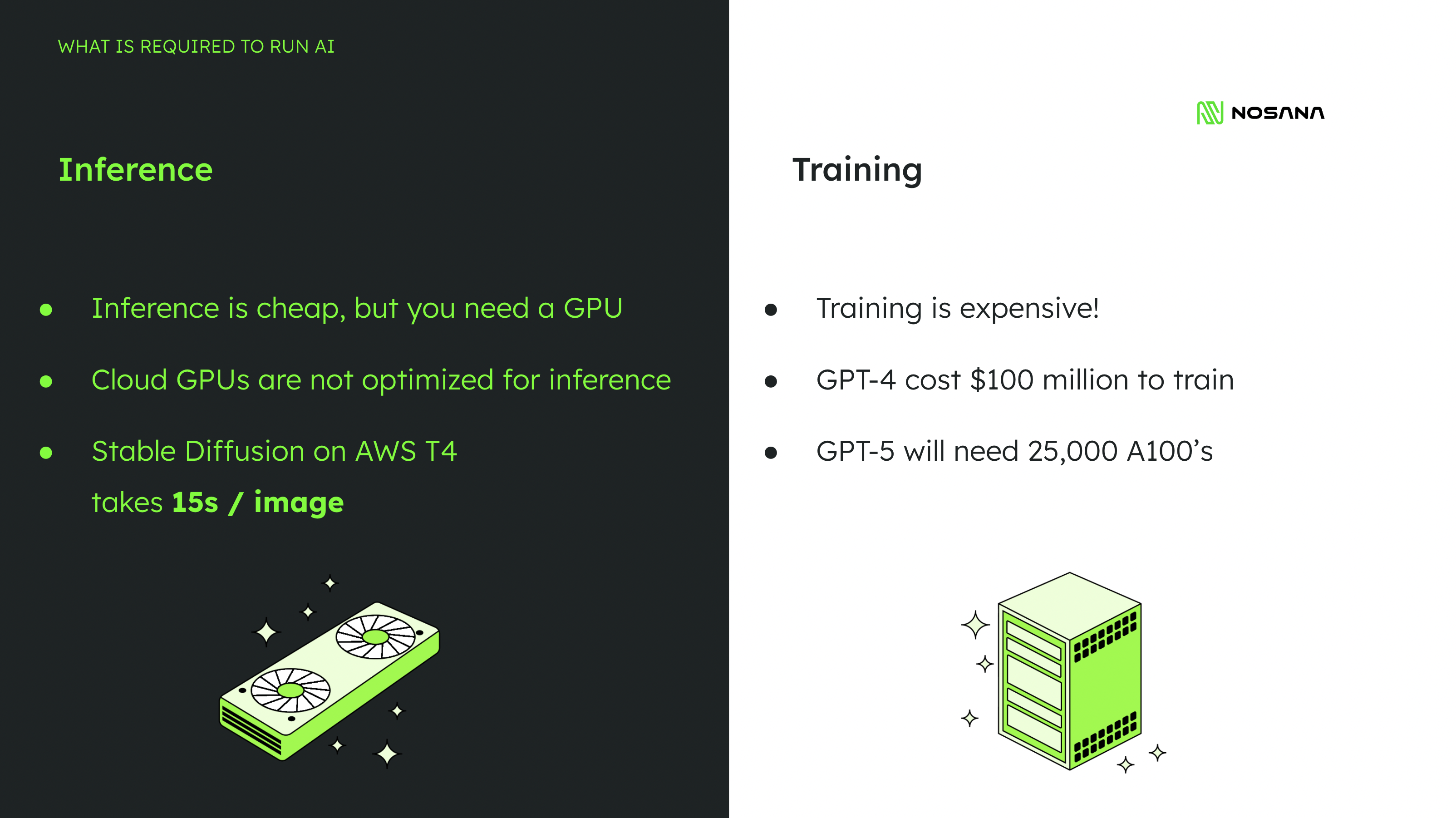

Training: Teaching Models to Learn

Deep learning training is the compute-intensive process of building AI models, usually neural networks, from large datasets. By iteratively adjusting their internal parameters, the models narrow the gap between what they predict and the correct outputs in the training data. Because this stage is so computationally demanding, running it efficiently requires powerful GPUs.
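
To make the idea of iterative parameter adjustment concrete, here is a minimal sketch in plain Python. It assumes the simplest possible model, a straight line y = w*x + b fitted by gradient descent on mean squared error. The function name `train_linear`, the learning rate, and the toy data are illustrative choices, not part of any particular framework. Real deep learning training applies the same loop to millions or billions of parameters, which is where GPUs come in.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # parameters start untrained
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: predictions with the current parameters.
        preds = [w * x + b for x in xs]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Update step: move each parameter against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy dataset generated from y = 2x + 1 (illustrative only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))  # should land very close to 2.0 and 1.0
```

Each pass through the loop shrinks the gap between prediction and target a little, which is the same principle a neural network follows at far larger scale.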

Inference: Applying Learned Knowledge

After training, models move to inference, where they apply the patterns they have learned to new data they did not see during training. Inference is what real-world AI applications actually run, so it has to be fast and efficient, particularly when decisions must be made quickly.
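
As a rough illustration, inference can be sketched as a forward pass with frozen parameters: no gradients, no updates, just a prediction. The example below assumes a tiny logistic classifier. `WEIGHTS`, `BIAS`, and the input values are hypothetical stand-ins for whatever a finished training run would have produced.

```python
import math

# Hypothetical parameters, standing in for the output of a finished training run.
WEIGHTS = [1.5, -2.0]
BIAS = 0.25


def predict_proba(features):
    """Forward pass only: weighted sum followed by a sigmoid."""
    z = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def predict(features, threshold=0.5):
    """Turn the probability into a 0/1 decision."""
    return int(predict_proba(features) >= threshold)


# New inputs the model has not seen before (illustrative values).
new_inputs = [[2.0, 0.5], [0.1, 1.2]]
labels = [predict(x) for x in new_inputs]
print(labels)
```

Because nothing is being learned at this stage, each request costs a single forward pass, which is why latency and throughput matter more here than raw training horsepower.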

Exploring the Distinction

Understanding how training and inference relate is key to understanding machine learning. The two are interconnected but serve distinct purposes: training lays the foundation, and inference builds on it.

Learning by Example: A Human Analogy

To grasp the essence of deep learning, consider how humans acquire a new skill. Whether by reading a manual, watching instructional videos, or observing someone proficient in the task, we learn by studying and mimicking. Similarly, deep neural networks (DNNs) learn patterns from existing data during the training phase and then apply those patterns during inference.

Navigating Real-World Scenarios

In practical terms, consider a chatbot trained to understand and respond to user queries. During training, the chatbot learns from diverse conversations, gaining the ability to recognize patterns in language. In the real world, every reply it produces is an act of inference, including replies to inputs not explicitly covered in its training data, such as unconventional expressions or emerging slang. Because it learned general patterns rather than memorizing examples, it can generate meaningful responses even in unforeseen scenarios, which shows the practical significance of both training and inference in AI.

Strategic Applications in Critical Domains

Beyond chatbots, the distinctions between AI training and inference play a vital role in critical domains such as autonomous vehicles, fraud detection, and healthcare. For instance, the real-time decision-making required in autonomous vehicles heavily relies on inference, ensuring immediate and accurate responses to dynamic environments. In healthcare, AI models trained on medical data can infer insights and aid in diagnostics.

Computational Needs and Cost Implications

Because of their parallel processing power, GPUs have long been used for both training and inference. As AI models grow more complex and data volumes increase, this dependence has only deepened. Rising demand from multiple sectors has led to the recent GPU shortage, which in turn has pushed costs up and limited availability. This situation directly affects the cost and viability of AI projects and makes things particularly difficult for AI development teams with limited resources.

Blockchain-Based Solutions: A New Era in AI Development

To address these issues, we have built a decentralized GPU compute grid. Our platform makes AI inference more accessible and economical by using blockchain technology to distribute computational tasks across a network of GPUs. This decentralized strategy aims to offer a new paradigm in AI development while also helping to ease the GPU shortage. Our goal is to widen access to computational resources so that more developers and entrepreneurs can work on AI research and development. Our technology tackles two major issues in AI development: scalability and affordability. A distributed network of resources helps AI projects grow without becoming unaffordable or sacrificing processing times, which is essential for real-time AI applications.
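
For readers who want a feel for what distributing tasks over a network of GPUs involves, the sketch below shows one generic way to do it: greedy least-loaded assignment. This is an illustration only and not a description of our platform's scheduler. The function `assign_tasks`, the node names, and the task costs are all hypothetical, and the blockchain coordination layer is left out entirely.

```python
import heapq


def assign_tasks(task_costs, node_names):
    """Greedily give each task to the node with the least accumulated work."""
    # Heap entries are (accumulated_cost, node_name); the lightest node pops first.
    heap = [(0.0, name) for name in node_names]
    heapq.heapify(heap)
    assignment = {name: [] for name in node_names}
    # Place the most expensive tasks first so the loads even out.
    for task_id, cost in sorted(task_costs.items(), key=lambda kv: -kv[1]):
        load, name = heapq.heappop(heap)
        assignment[name].append(task_id)
        heapq.heappush(heap, (load + cost, name))
    loads = {
        name: sum(task_costs[t] for t in tasks)
        for name, tasks in assignment.items()
    }
    return assignment, loads


# Hypothetical inference requests with estimated costs (arbitrary units).
tasks = {"req-a": 4.0, "req-b": 3.0, "req-c": 2.0, "req-d": 1.0}
assignment, loads = assign_tasks(tasks, ["gpu-node-1", "gpu-node-2"])
print(assignment)
print(loads)
```

A production grid would also have to account for node reliability, network latency, and verification of results, none of which this sketch attempts.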

The Interplay Between Training and Inference in the Context of Hardware Limitations

Training and inference reinforce each other. The quality of training directly determines how well inference performs, and insights gained during inference can be fed back into training to produce more accurate and efficient models. The present GPU shortage exposes the vulnerability of AI development that depends on centralized hardware resources. These limits are driving a shift toward more inventive, distributed models of computation, including ones coordinated through blockchain technology.

Looking at deep learning training and inference alongside the difficulties posed by the GPU shortage highlights a watershed moment in AI development. Blockchain-based solutions such as our GPU-compute grid are more than a reaction to these issues; they are a forward-thinking approach with the potential to reshape the landscape of AI development. Scalable, accessible, and cost-effective computational resources are critical for AI to keep advancing, and a level playing field is needed to stimulate innovation across all industries.

Want to learn more? Visit our Discord server to understand how you can get involved in what we're doing.